· Dan · Guides · 4 min read

Hosting n8n for MSPs

Host n8n on Railway for a resilient setup with minimal ops.

Self-hosting n8n gives you the most flexibility. Many nodes for the MSP industry don’t have built-in support on n8n cloud.

However, it’s not hard to self-host. Here are instructions so simple any tier 1 can set it up.

Why Railway

If you’re like us, you have free Azure credits, at least one person who knows AWS, and already have a few things on DigitalOcean or other VPS providers. So why add another? Time and money.

First, money. Railway only charges for active CPU resources. If your system is idle most of the time (like most workloads), you probably won’t pay much. Our very active setup typically uses $6/month of credits. Automated database backups are even included at the $20/month usage plan, giving you plenty of headroom for more things to play with.

Second, time. Everything (deployments, logs, databases, secrets) lives in a single dashboard. You don’t need to know anything about IAM, VPCs, Linux, Docker or Kubernetes, and you never need to see a console or edit a config file. Domain names, SSL, reverse proxies and load balancing are automatic and the default.

Think about the cost of your Tier 3 tech and your sleep, then consider how many years of service you can pay for with just one “learning opportunity”.

The Setup

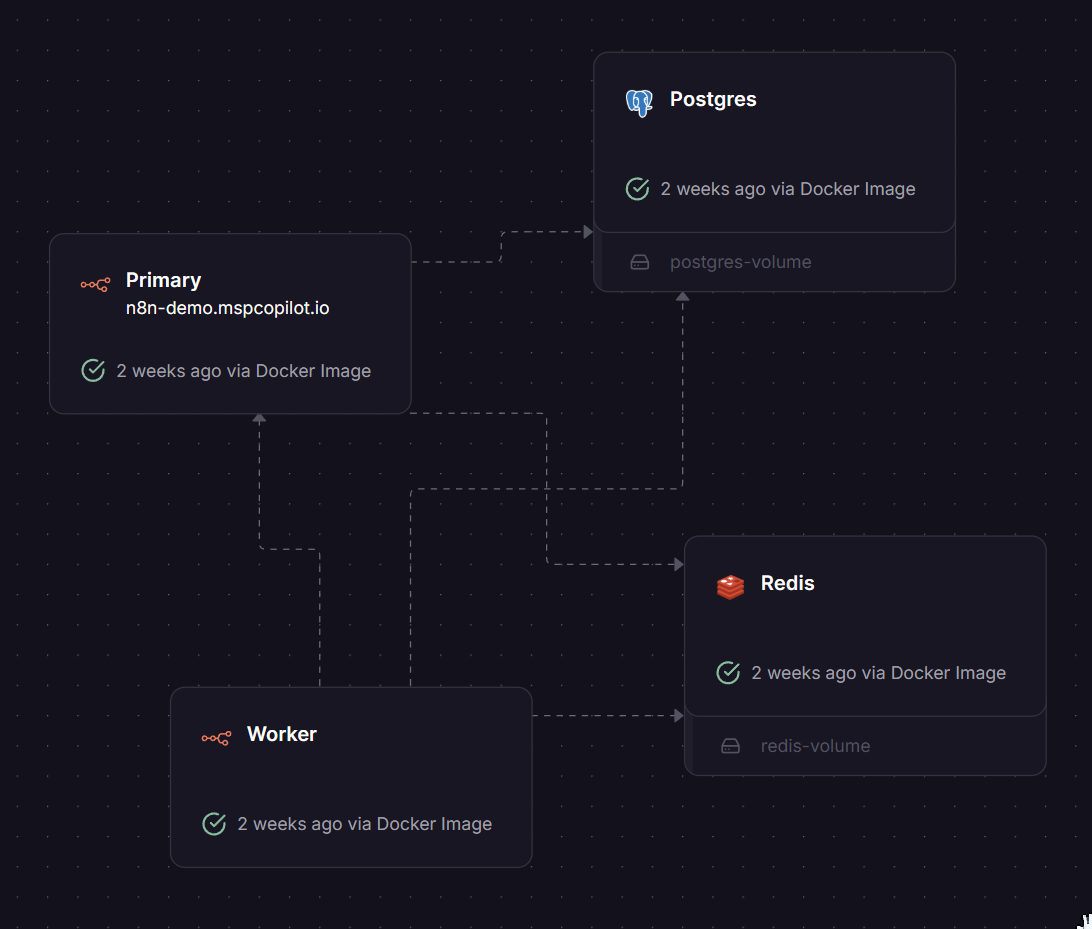

There are several n8n templates available on Railway, but here’s one that takes into account all the additional tweaks below: n8n Railway Template. This is not one of the published templates on railway.com.

There are simpler setups, but this one doesn’t cost much more (<$1/month), and things like the database are difficult to switch later.

Environment Variables

Here are the environment variables worth considering that differ from the default deployments.

Proxy settings: N8N_PROXY_HOPS="1" N8N_TRUST_PROXY="true"

These tell n8n to trust the Railway proxy service, which affects things like reported client IPs and rate limiting. If you ever add an additional proxy in front, increase the hop count to 2.
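To see why the hop count matters, here is a rough sketch of how Express-style hop counting picks the client IP out of the X-Forwarded-For header. It is an illustration only: resolveClientIp is a hypothetical helper, the IP addresses are made up, and it assumes n8n hands the hop count to its web server in the usual Express way rather than showing n8n's actual code.

```javascript
// Hypothetical helper: picks the client IP the way Express-style
// "trust proxy = N hops" counting generally works.
function resolveClientIp(socketAddress, forwardedFor, trustedHops) {
  // X-Forwarded-For reads "client, proxy1, proxy2": each proxy appends
  // the address it received the request from.
  const forwarded = (forwardedFor || "")
    .split(",")
    .map((part) => part.trim())
    .filter(Boolean);

  // Walk backwards from the direct connection toward the original client.
  const chain = [socketAddress, ...forwarded.reverse()];

  // Skip one address per trusted hop, but never run off the end of the chain.
  const index = Math.min(trustedHops, chain.length - 1);
  return chain[index];
}

// One proxy (Railway) in front: trust 1 hop.
console.log(resolveClientIp("10.0.0.5", "203.0.113.7", 1)); // 203.0.113.7

// A spoofed first entry is ignored, because only the last hop is trusted.
console.log(resolveClientIp("10.0.0.5", "6.6.6.6, 203.0.113.7", 1)); // 203.0.113.7

// A second proxy in front of Railway: trust 2 hops.
console.log(resolveClientIp("10.0.0.5", "203.0.113.7, 172.16.0.9", 2)); // 203.0.113.7
```

Set the count too low and every request appears to come from the proxy. Set it too high and a client can fake its own IP by sending a forged header.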

Community package support: N8N_REINSTALL_MISSING_PACKAGES="true"

Because the typical Railway configuration doesn’t utilize any local storage (welcome to the new world), n8n community nodes need to be reinstalled upon load. This calls for extra care when choosing which nodes to install.

Code node functionality:

NODE_FUNCTION_ALLOW_BUILTIN="crypto" This allows you to utilize the crypto module in code nodes. The module already ships with Node.js, but this setting lets you do things like hashing in a code node.

NODE_FUNCTION_ALLOW_EXTERNAL="dotenv,lodash,showdown,html-to-text" These are external node modules you can use in a code node. These are the ones we use, and all of them are optional.
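As a quick illustration of what the crypto setting unlocks, here is a minimal sketch of hashing a field in a code node. The addEmailHash helper and the email field are made up for the example; swap in whatever your workflow actually carries.

```javascript
// Works in a code node only when NODE_FUNCTION_ALLOW_BUILTIN includes "crypto".
const crypto = require("crypto");

// Hypothetical helper: adds a SHA-256 hash of each item's (assumed) email field.
function addEmailHash(items) {
  return items.map((item) => {
    // Normalize first so "Dan@Example.com " and "dan@example.com" match.
    const normalized = String(item.json.email).trim().toLowerCase();
    const emailHash = crypto
      .createHash("sha256")
      .update(normalized)
      .digest("hex");
    return { json: { ...item.json, emailHash } };
  });
}

// In a code node set to "Run Once for All Items", the last line would be:
//   return addEmailHash($input.all());
// Outside n8n, you can try it with a sample item:
console.log(addEmailHash([{ json: { email: "Dan@Example.com " } }]));
```

A hash like this is handy for de-duplicating contacts or building stable lookup keys without passing the raw value around.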

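QUEUE_HEALTH_CHECK_ACTIVE=true Set this on Workers to enable the /healthz check. (QUEUE_HEALTH_CHECK_PORT is a separate setting that takes a port number, not true/false.)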

Zero Downtime Upgrades

Want to restart or upgrade the system live without interrupting any workflows?

Enable Teardown. I set Overlap to 15 seconds and Draining to 300 seconds (5 minutes).

When you initiate a shutdown, Railway signals n8n to shut down and gives it 300 seconds to wrap up. In the meantime, it starts up a new instance and, once that is confirmed online, starts routing requests there instead.

It’s completely unnecessary for most setups, but if you want multiple workers (or even primaries) for redundancy or load, you just need to change the number of instances. They will automatically spin up and process inbound requests from the Redis queue. Best of all, the added cost is minimal since they are idle most of the time.

The template has the Healthcheck Path of /healthz/readiness set for both the primary and worker nodes. This prevents a bad rebuild from taking down your system.
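If you want to confirm the readiness endpoint yourself after a deploy, a small polling script will do it. This is a sketch only: waitForReady, its options, and the n8n.example.com domain are made up for illustration, and it assumes Node 18 or later for the built-in fetch.

```javascript
// Hypothetical helper: polls the readiness path until it answers with a 2xx.
// Resolves with the attempt number that succeeded, or throws after the last try.
async function waitForReady(
  baseUrl,
  { fetchImpl = fetch, attempts = 10, delayMs = 3000 } = {}
) {
  const url = new URL("/healthz/readiness", baseUrl).toString();

  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      const response = await fetchImpl(url);
      if (response.ok) return attempt;
    } catch (err) {
      // Connection refused or DNS not ready yet: treat it as "not ready".
    }
    if (attempt < attempts) {
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw new Error(`${url} was not ready after ${attempts} attempts`);
}

// Example usage (placeholder domain, replace with your own):
// waitForReady("https://n8n.example.com").then((n) => console.log(`ready after ${n} tries`));
```

Railway runs its own version of this check on every deploy, which is why a build that never becomes ready never receives traffic.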

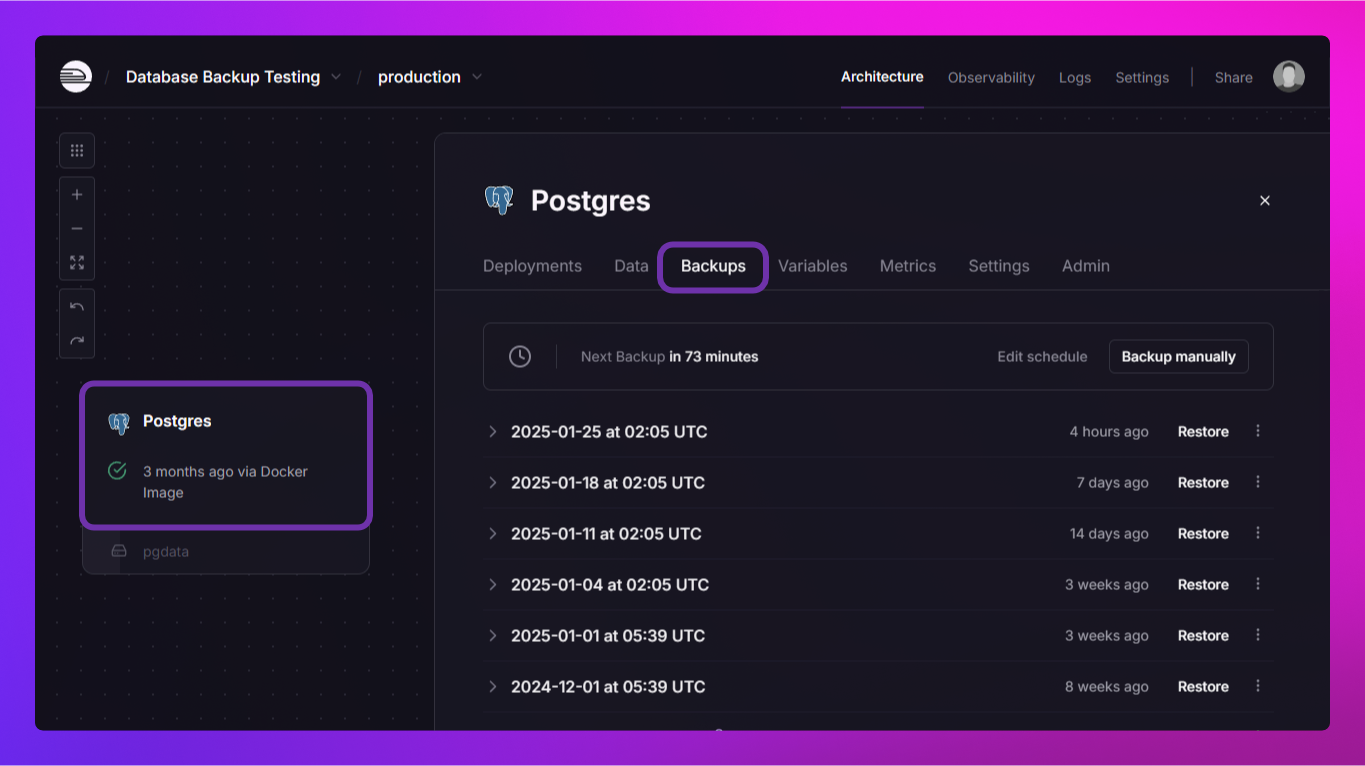

Backups

For basic backups, you can use the ones built into Railway. You only need to back up the Postgres volume. Open the Backups tab, choose Edit schedule, and check off one or more options.

For more clever backups of the workflows, see this n8n to GitHub workflow.

Custom URL

You can start playing right away, but I recommend setting up a custom domain. Navigate to the Settings tab of the Primary service. Under Networking, choose Custom Domain and enter your domain. Railway will automatically set up the port. If you use Cloudflare proxy, it will automatically detect and adjust.

Going Further

It’s not necessary and will only save pennies, but you can trim down to a single n8n instance by deleting your Worker and adjusting this environment variable: EXECUTIONS_MODE=regular. This is easy to undo later.

OFFLOAD_MANUAL_EXECUTIONS_TO_WORKERS="false" This setting is being deprecated and is not recommended, but I like the extra second or two it saves when testing nodes. It does mean you could lock up your primary instance if you make an error.